aiacademy: 機器學習 Unsupervised Learning

Tags: aiacademy, dimensionality-reduction, machine-learning, unsupervised-learning

Unsupervised Learning

-

Outline

- Unsupervised learning

-

Dimension reduction

- Principal component analysis (PCA)

- T-Distributed Stochastic Neighbor Embedding (t-SNE)

-

Clustering

- K-means

- Hierarchical clustering

Dimension Reduction

跟 Coursera 的 Data Compression 一樣

-

Why dimension reduction ?

- compress data preserve useful information

- Data visulization

Principlal Component analysis (PCA)

先作筆記,等看到 coursera 的時候再來複習!!!

- PCA

- 把高維度的點,投影到低維度上面,且希望在低維度空間中保有在高維度中的性質!

PCA in general

- Perform PCA by computing the eigenvectors of the k largest eigenvalues of the covariance matrix

PCA on MNIST

T-SNE (T-distributed Stochastic Neighbor Embedding)

-

Goal: find locations in low dimensions such that the distance between points are preserved

-

T-SNE allows non-linear transforms from the original data point to the new data point

T-SNE

Why different silmilarity measures?

- Crowding problem: distance between distant data points in the low dimension will not be large enough by SNE, which uses the same similarity measure for both low and high dimensional space

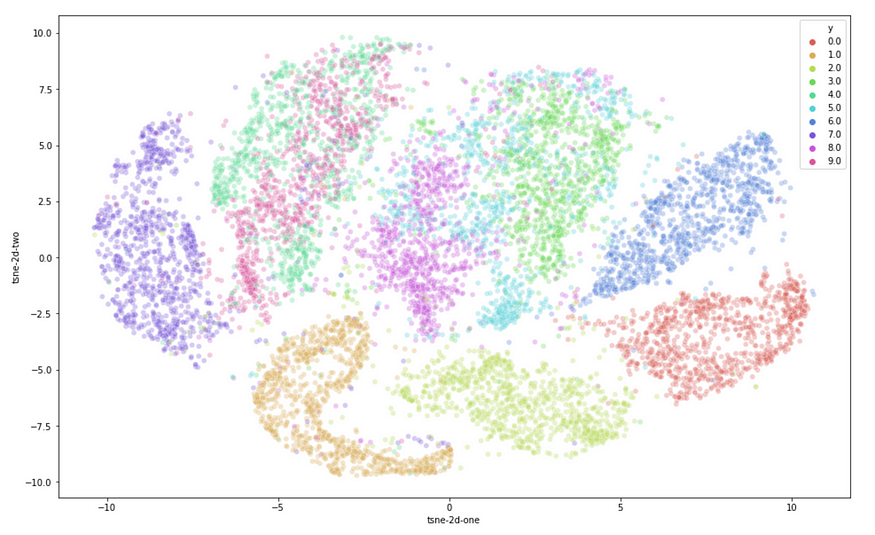

T-SNE on MNIST

Summary

-

Both PCA and t-SNE project data points into low dimension

- PCA allows only linear projection

- T-SNE allows non-linear projection

-

Adv of PCA

- Interpretability

- Can project new data points

-

Adv of t-SNE

- Visualization

Clustering

Hierarchical clustering

-

Types of hierarchical clustering

- Agglomerative (bottom-up)

- Start with each data point as a cluster

- Merge two closest clusters until only one cluster left

- Divisive (top-down)

- Start with one cluster

- Each step split a cluster until each cluster contains one data point

- Agglomerative (bottom-up)

Agglomerateive example

Different cluster result

Summary

- Clustering

- k-means and hierachical clustering