aiacademy: 自然語言處理 NLP 3.2 Sequence Encoding & Attention

Tags: aiacademy, attention, nlp, sequence-encoding

Sequence Encoding Basic Attention

Representations of Variable Length Data

- Input: word sequence, image pixels, audio signal, click logs

- Propery: continuity, temporal, importance distribution

- Example

- Basic combination: average, sum

- Neural combination: network architecurees should consider input domain prperties

- CNN (convolutional neural network)

- RNN (recurrent neurlal network): temporal information

Network architectures should consider the input domain properties

Recurrent Neural Networks (RNN)

-

Learning variable-length representations

- Fit for sentences and sequences ofvalues

-

Sequential computation makes parallelization difficult

-

No explicit modeling of long and short range dependenceies

-

Convolutional neural Networks

-

Easy to parallelize

- Exploit local dependencies

- Long-distance dependencies require many layers

-

Attention

- Encoder-decoder model is important in NMT

- RNNs need attention mechanism to handle long dependencies

-

Attention allows us to access any state

-

Machine Translation with Attention

- query: 拿進來去算 match 程度的資訊 z

- key: 拿過來被算的 vectors 叫 key

- 拿 query 在 key 上面做 match 程度

- key 用來算 α

- value: 可以和key是不一樣的,value 是用來做 weighted sum 的

Dot-Product Attention

- Input: a query q and a set of key-value (k-v) pairs to an output

- Output: weighted sum of values

Dot-Product Attention in Matirx

- Input: multiple queries q and a set of key-value (k-v) pairs to an output

- Output: a set of weighted sum of values

Sequence Encoding Self-Attention

Attention

- Encoder-decoder modle is important in NMT

- RNNs need attention mechanism to handle long dependencies

- Attention allows us to access any state

Using attention to replace recurrence architectures

Self-Attention

-

Constant “path length” between two positions

-

Easy to parallelize

Transformoer Idea

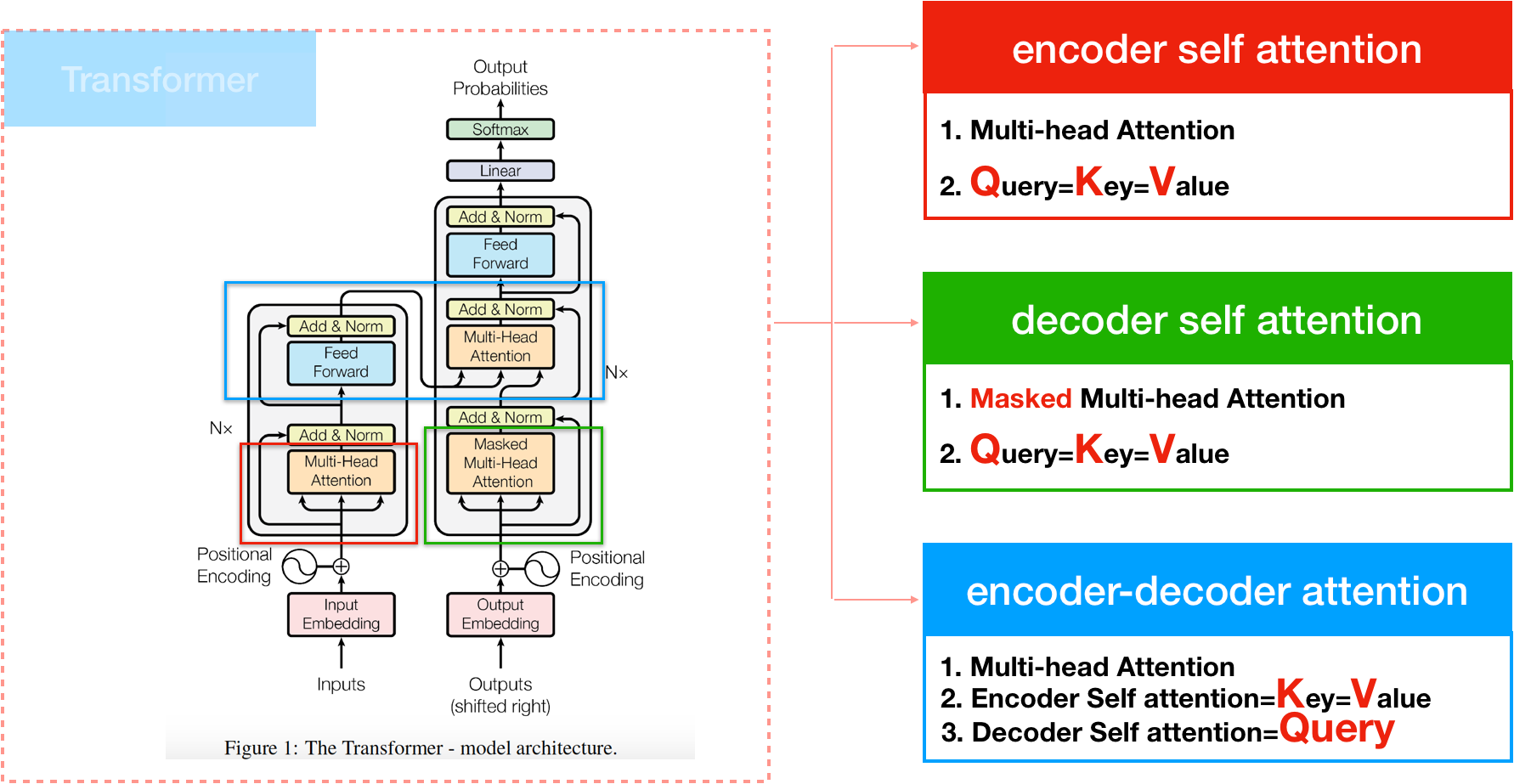

Encoder Self-Attention

Decoder Self-Attention

Sequence Encoding Multi Head Attention

Convolution

Self-Attention

Attention Head: who

Comparision

Sequence Encoding Transformer

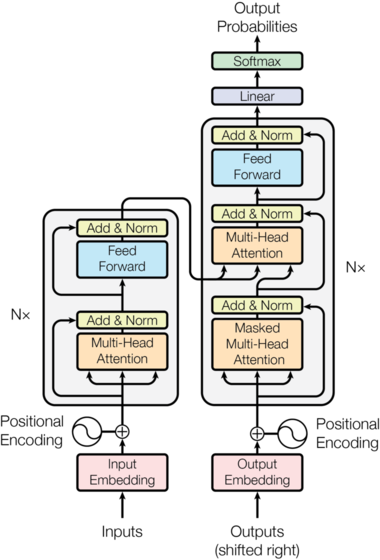

Transformer Overview

- Non-recurrent encoder-decoder for MT

- PyTorch explanation by Sasha Rush

- 一定要自己看一次,超棒棒~

- http://nlp.seas.harvard.edu/2018/04/03/attention.html

Multi-Head Attention

Scaled Dot-Product Attention

Transformer Encoder Block

Encoder Input

Multi-Head Attention Details

Training Tips

- Byte-pair encodings (BPE)

- Checkpoint averaging

- ADAM optimizer with learning rate changes

- Dropout during trainiing at every layer just before adding residual

- Label smoothing

- Auto-regressive decoding with beam search and length penalties

ML Experiments

Parsing Experiments

Condluding Remarks

| Non-recurrence model is easy to paralleize |  |

| Multi-head attention captures different aspects by interacting between words | |

| Positional encoding captures location information | |

| Each transformer block can be applied to diverse tasks |